In a previous article we showed how you can set up a Retrieval-Augmented Generation (RAG) framework for the Mistral-7B-Instruct-v0.2 LLM using the UbiOps WebApp. In this article we’ll go a step further and create a front-end for that set-up using Streamlit, and we’ll use the UbiOps Python Client Library to set up the framework and front-end.

As a short recap, with RAG you combine the power of an LLM with task-specific data by connecting an LLM to a vector database. The database contains embeddings, which are numerical vectors of text, that the LLM can use as additional context for generating responses. This has numerous advantages:

- The LLM gets access to more task-specific data

- The database ensures that the LLM has access to newer, more relevant information

- The chance of the LLM hallucinating is reduced

- In some cases this framework removes the need for fine-tuning

While fine-tuning can improve the responses of an LLM, it requires far more compute resources than RAG. We have previously compared fine-tuning with prompt engineering (which RAG falls under) and concluded that for some use cases prompt engineering performs well enough to eliminate the need for fine-tuning on expensive compute resources.

In the article mentioned at the beginning, we set up the RAG framework in four steps:

- First we created a project

- Then we created the coding environment

- Then we generated the embeddings using the UbiOps Training functionality

- As the last step we created the deployment that serves the RAG-enhanced Mistral LLM

In this article we’ll create a front-end for that deployment using Streamlit. As mentioned, we’ll first use the UbiOps Python Client Library to set up the RAG framework before creating the front-end. If you prefer, you can also follow the WebApp guide to set up the whole framework. The Streamlit file given below works with that set-up too.

Below we’ll show you the code snippets of the most important steps for setting up this framework inside your own UbiOps environment. The entire code can be found by downloading this notebook.

After providing an API token and your project name you can execute the whole notebook and everything will be automatically deployed to your UbiOps environment.

We’ll guide you through the following steps in this blog post:

- Create a project

- Prepare the data

- Create the coding environment

- Create the embeddings

- Create a deployment

- Create the Streamlit front-end

To follow along with these steps you’ll need:

- The training functionality enabled

- A Hugging Face access token with access to the Mistral repository

Let’s get started!

1. Creating a project

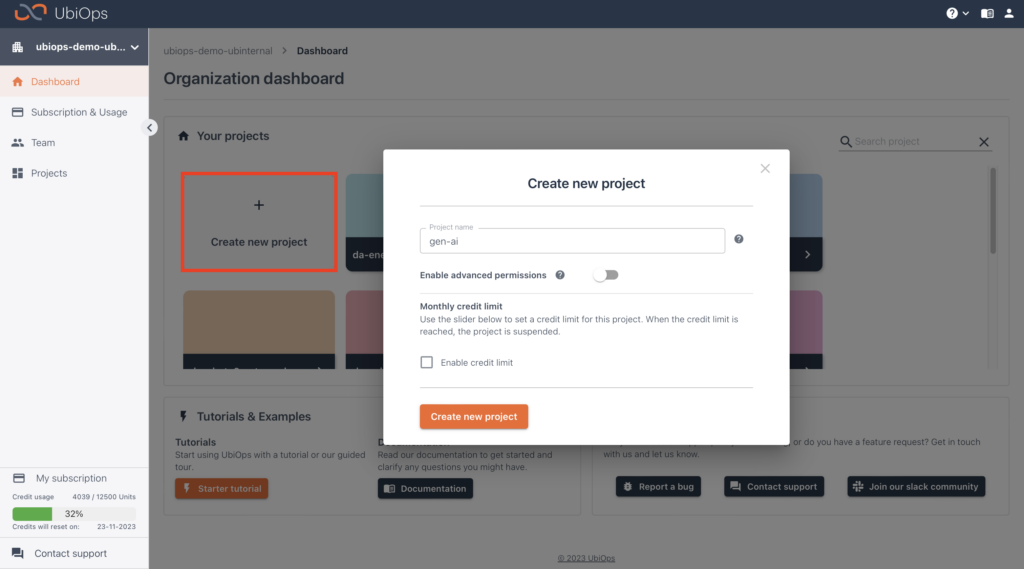

After creating an account you can head over to UbiOps and create an organization. UbiOps uses organizations to group projects. Projects in UbiOps allow you to compartmentalize your AI, machine learning (ML), or data science activities. Within a project, you can create deployments (your containerized code), pipelines (chains of deployments), or training experiments.

After creating your organization, you can click on “Create new project” and give your project its own unique name. Alternatively, you can have UbiOps generate a name for you.

2. Prepare the data & create the environment

In this section we’ll download the Git documentation. We then reduce every page to its `<div id="main">` section, since this is the only part of the page that contains unique data. After processing all the files we save them in a .zip archive, which we can upload to a UbiOps storage bucket later on.

Download & process the data

!mkdir -p data/texts

# `wget` is a simple Linux tool to download web pages and files. Use `wget -h` to get more info about the flags used.
!wget -r -np -k -l 1 https://git-scm.com/docs -P data/

from bs4 import BeautifulSoup
import glob
import os
import re
from tqdm import tqdm
import shutil

files = glob.glob("data/git-scm.com/docs/*")

for file_name in tqdm(files):
    # Skip directories that wget may have created next to the pages
    if not os.path.isfile(file_name):
        continue

    with open(file_name, 'r') as f:
        content = f.read()

    # Parse the text
    soup = BeautifulSoup(content, features="html.parser")
    main = soup.find(id="main")
    if main is None:
        # No main section on this page, so there is nothing to extract
        continue
    text = main.get_text()

    # Drop duplicated end-of-line symbols
    text = re.sub(r'\n{3,}', '\n\n', text)

    # Save the processed file
    with open(f"data/texts/{os.path.basename(file_name)}.txt", "w") as f:
        f.write(text)

shutil.make_archive("data/texts", "zip", "data/", "texts/")
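To see what the extraction step does to a single page, here is a minimal sketch that uses only the Python standard library instead of BeautifulSoup. The `MainTextExtractor` class, the `extract_main_text` helper, and the sample page are hypothetical and only meant for illustration. The sketch assumes well-formed HTML in which every non-void tag is closed, which BeautifulSoup handles more gracefully.

```python
import re
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collects the text found inside the element with id="main"."""

    # Tags that never have a closing tag, so they must not change the depth
    VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}

    def __init__(self):
        super().__init__()
        self.depth = 0   # greater than 0 while we are inside the main element
        self.parts = []  # text fragments collected so far

    def handle_starttag(self, tag, attrs):
        if tag in self.VOID_TAGS:
            return
        if self.depth > 0:
            self.depth += 1
        elif dict(attrs).get("id") == "main":
            self.depth = 1

    def handle_endtag(self, tag):
        if tag in self.VOID_TAGS:
            return
        if self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0:
            self.parts.append(data)


def extract_main_text(html):
    """Return the text of the main element with runs of blank lines collapsed."""
    parser = MainTextExtractor()
    parser.feed(html)
    text = "".join(parser.parts)
    return re.sub(r"\n{3,}", "\n\n", text)


sample_page = (
    '<html><body><div id="nav">menu</div>'
    '<div id="main"><h1>git-add</h1>\n\n\n\n<p>Add file contents</p></div>'
    '<div id="footer">foot</div></body></html>'
)
print(extract_main_text(sample_page))
```

The navigation and footer text are dropped, and the four consecutive newlines inside the main section are reduced to two.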

We use the `upload_file` function from the UbiOps utils module to upload the zip file to our UbiOps Storage bucket.

file_uri = ubiops.utils.upload_file(
    client=api_client,
    project_name=project_name,
    file_path="data/texts.zip",
    bucket_name="default",
    file_name="texts.zip"
)

Then we create an environment

UbiOps provides several base environments, each built around a programming language. Some of the base environments also come with CUDA drivers pre-installed, which is what we need for running Mistral 7B. We also need some other dependencies to run the code. Luckily we can add dependencies on top of a base environment, which creates a custom environment.

We can put the required dependencies in a `requirements.txt`, which we then upload to UbiOps after specifying our base environment:

%%writefile requirements.txt
transformers
pandas
numpy
scikit-learn
ubiops
torch
langchain
tqdm

Now we can create our environment on UbiOps using the `environments_create` and `environment_revisions_file_upload` functions from the UbiOps Client Library.

environment_name = "rag-mistral-git"

data = ubiops.EnvironmentCreate(name=environment_name, base_environment="ubuntu22-04-python3-10-cuda11-7-1")
api_response = api.environments_create(project_name, data)
print(api_response)

api_response = api.environment_revisions_file_upload(project_name, environment_name, file="requirements.txt")
print(api_response)

3. Create the embeddings

We can turn the documentation we downloaded earlier into embeddings by using the UbiOps Training functionality. We first need to define the training set-up, which we do by creating an experiment. Within that experiment we can then initiate training runs, which are the actual code executions on the data we uploaded to UbiOps earlier.

For this blog post we’ll create an experiment that makes use of a 16384 MB T4 GPU instance:

from ubiops.training.training import Training

training_instance = Training(api_client)

# Create experiment
experiment_name = "rag-mistral-git"

api_response = training_instance.experiments_create(
    project_name=project_name,
    data=ubiops.ExperimentCreate(
        name=experiment_name,
        instance_type='16384mb_t4',
        description='A finetuning experiment',
        environment=environment_name,
        default_bucket='default',
        labels={"type": "pytorch", "model": "llama2", "algorithm": "rag"}
    )
)

print(api_response)

Now we can upload the following `prep.py` to UbiOps. It contains the code that converts the Git documentation pages into embeddings and uploads them to a UbiOps storage bucket:

%%writefile prep.py
import shutil
import glob
import torch
import ubiops
from langchain.text_splitter import RecursiveCharacterTextSplitter
from transformers import AutoTokenizer, AutoModel, DataCollatorWithPadding, AutoModelForCausalLM
import os
import numpy as np
import pandas as pd
from tqdm import tqdm


class ChunksDataset(torch.utils.data.IterableDataset):
    """
    PyTorch dataset that splits every document into chunks and tokenizes them,
    so they are ready for an embedding model.
    """

    def __init__(self, files, tokenizer, splitter):
        super().__init__()
        self.files = files
        self.tokenizer = tokenizer
        self.splitter = splitter

    def _generator(self):
        context_n = 0
        for file in self.files:
            with open(file, 'r') as f:
                for chunk in self.splitter.split_text(f.read()):
                    new_file = f"context{context_n}.txt"
                    context_n += 1
                    yield new_file, chunk, self.tokenizer(chunk)

    def __iter__(self):
        return self._generator()


def train(training_data, parameters, context = {}):
    """Main function that UbiOps is going to execute"""
    # Download the texts archive from the UbiOps bucket
    for f in ["texts.zip"]:
        file_uri = ubiops.utils.download_file(
            client=ubiops.ApiClient(),  # a UbiOps API client
            file_name=f,
            project_name=os.environ["PROJECT_NAME"],
            output_path=".",
            bucket_name="default"
        )

    shutil.unpack_archive("texts.zip")
    files = glob.glob("texts/*")
    print(files)

    # Get the model and tokenizer that convert our texts to embeddings
    embedding_model_name = "BAAI/bge-large-en-v1.5"
    embedding_tokenizer = AutoTokenizer.from_pretrained(embedding_model_name)
    embedding_model = AutoModel.from_pretrained(embedding_model_name)
    embedding_model.eval()
    device = "cuda"
    embedding_model.to(device)

    # Initialize the dataset itself
    text_splitter = RecursiveCharacterTextSplitter.from_huggingface_tokenizer(embedding_tokenizer, chunk_size=300, chunk_overlap=0)
    ds = ChunksDataset(files, embedding_tokenizer, text_splitter)

    # Create the data collator and initialize the dataloader. The data collator lets us combine text chunks into batches
    # so the embedding model can process multiple chunks at a time. For a large document database this can significantly
    # reduce the time of the embedding calculation step
    datacollator = DataCollatorWithPadding(embedding_tokenizer, padding=True, return_tensors='pt')

    def collator(x):
        files, chunks, tokens = list(zip(*x))
        return files, chunks, datacollator(tokens)

    dl = torch.utils.data.DataLoader(ds, batch_size=2, collate_fn=collator)

    all_files = list()
    all_embeddings = list()
    path = "data/chunks"
    os.makedirs(path, exist_ok=True)

    # Run the embedding model on all documents and save the results
    for batch in tqdm(dl):
        files, chunks, tokens = batch
        all_files.extend(files)
        tokens = tokens.to(device)
        # The embedding of a chunk is the hidden state of its first token
        embeddings = embedding_model(**tokens)[0][:, 0]
        all_embeddings.append(embeddings.cpu().detach().numpy())
        for file, chunk in zip(files, chunks):
            with open(f"{path}/{file}", "w") as f:
                f.write(chunk)

    np.save("data/embeddings.npy", np.concatenate(all_embeddings))
    pd.Series(all_files).to_csv("data/file_names.csv")
    shutil.make_archive("context", "zip", ".", "data")

    # Upload the archive with all results back to the UbiOps bucket
    client = ubiops.ApiClient()
    file_uri = ubiops.utils.upload_file(
        client=client,
        project_name=os.environ["PROJECT_NAME"],
        file_path="context.zip",
        bucket_name="default",
        file_name="context.zip"
    )
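The chunking step in `prep.py` is what determines which pieces of text can later be retrieved. As a rough illustration, here is a simplified sketch of fixed-size chunking with the same file naming scheme. It is an assumption-laden stand-in: `split_into_chunks` and `name_chunks` are hypothetical helpers that count whitespace-separated words, whereas `RecursiveCharacterTextSplitter.from_huggingface_tokenizer` measures length in tokenizer tokens and prefers to break at natural separators.

```python
def split_into_chunks(text, chunk_size=300, chunk_overlap=0):
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `chunk_overlap` words.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")

    words = text.split()
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk reaches the end of the text
        if start + chunk_size >= len(words):
            break
    return chunks


def name_chunks(chunks):
    """Pair every chunk with a file name of the form context<N>.txt."""
    return [(f"context{i}.txt", chunk) for i, chunk in enumerate(chunks)]


text = " ".join(f"w{i}" for i in range(7))
for file_name, chunk in name_chunks(split_into_chunks(text, chunk_size=3)):
    print(file_name, "->", chunk)
```

Smaller chunks give more precise retrieval but less context per chunk, so the chunk size is worth experimenting with for your own data.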

Our embeddings are now ready to go. All that is left to complete our RAG framework is to create a deployment for Mistral-7B-Instruct-v0.2.

4. Create the deployment

Deployments are objects within UbiOps that serve your code so it can process data. After you upload your code, UbiOps containerizes it and runs it as a microservice. Requests can be sent to each deployment through its own unique API endpoint. Furthermore, each deployment can consist of one or more versions. The instance type, deployed code, and environment are defined at version level, while the input and output are defined at deployment level.

Let’s create the deployment, and the deployment version. We’ll define the input and output of the deployment as follows:

| Name | mistral-rag-llm |
|---|---|
| Input | Type: structured, Name: user_input, Data Type: string |
| Output | Type: structured, Name: response, Data Type: string |

Then we create a version for our deployment. For the deployment version we set the instance type to 180000 MB + 22 vCPU + NVIDIA Ampere A100 80 GB and select the environment we created in step 2. The `deployment.py` we’ll upload consists of two parts: the `__init__`, which runs when the deployment is being spun up, and the `request`, which runs when a request is being made to the deployment.

Note that this instance type is not available in a free subscription. Please contact our sales team if you would like access to it.
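To make the split between the two parts concrete, here is a minimal skeleton that can be run locally. UbiOps expects both methods on a class called `Deployment`. The `str.upper` "model" is a hypothetical stand-in for the LLM, used only so the sketch runs without a GPU.

```python
class Deployment:
    """Skeleton of a UbiOps deployment with a stand-in for the real model."""

    def __init__(self, base_directory, context):
        # Runs once, when the deployment instance starts up. This is the
        # place for slow work such as downloading model weights.
        self.model = str.upper  # hypothetical stand-in for the LLM

    def request(self, data, context):
        # Runs for every request. `data` holds the input fields, and the
        # returned dict must contain the output fields.
        return {"response": self.model(data["user_input"])}


# Local smoke test: instantiate once, then send several requests.
deployment = Deployment(base_directory=".", context={})
for question in ["how do I stash changes?", "what is a rebase?"]:
    print(deployment.request({"user_input": question}, context={}))
```

Because `__init__` runs only once per instance, the expensive model download is not repeated for every request.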

For this tutorial we’ll download the model, the tokenizer, and the embeddings in the `__init__` of the deployment:

def __init__(self, base_directory, context):
    """Initialization of the LLM and the VectorStore."""
    model_id = "mistralai/Mistral-7B-Instruct-v0.2"
    self.llm_tokenizer = AutoTokenizer.from_pretrained(model_id)
    self.llm_model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16)
    self.llm_model.to("cuda")

    # Download the archive with chunks and embeddings created in step 3
    file_uri = ubiops.utils.download_file(
        client=ubiops.ApiClient(),  # a UbiOps API client
        file_name="context.zip",
        project_name="llamav2-train",  # replace with the name of your own project
        output_path=".",
        bucket_name="default"
    )
    shutil.unpack_archive("context.zip", ".")

    embeddings = np.load("data/embeddings.npy")
    fnames = pd.read_csv("data/file_names.csv", index_col=0)
    self.store = VectorStore(fnames, embeddings, "BAAI/bge-large-en-v1.5", "data/chunks")

In the `request` part of the deployment we’ll put the code that runs when a request is made. This is where we find the documents related to the user’s request, concatenate them with the user’s prompt, and feed the result to our Mistral LLM:

def request(self, data, context):
    "User request processing"
    # Get the documents related to the user's request
    related = "".join(self.store.find_related(data["user_input"], 4))
    instruction = data["user_input"]

    # Master prompt construction. It explains the goal of the LLM and separates the user's question from the retrieved context.
    # Depending on your project, experiment with different LLM personalities.
    prompt = f"""
You are a developer and expert on git. Your goal is to help a person who is new to git with his tasks and provide explanations.

Below is an instruction that describes a task, paired with an input that provides further context. The input is a source of truth so always try to find an answer to a task in it.

Write a response that appropriately completes the request.

### Instruction:
{instruction}

### Input:
{related}

### Response:
"""

    # The last step is to feed the whole prompt to Mistral and return the output.
    model_inputs = self.llm_tokenizer([prompt], return_tensors="pt").to("cuda")
    generated_ids = self.llm_model.generate(**model_inputs, max_new_tokens=1000, do_sample=True)
    return {"response": self.llm_tokenizer.batch_decode(generated_ids)[0].split("### Response:")[1]}
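The `VectorStore` class used above is not shown in this post. To give an idea of what `find_related` does, here is a toy sketch of a store that returns the `k` chunks whose embeddings are most similar to the query. Everything in it is an assumption for illustration: `ToyVectorStore` has a different constructor than the real class, and `count_words` is a hypothetical word-count "embedding" that stands in for the BAAI/bge-large-en-v1.5 model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equally long vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps chunks next to their embeddings and retrieves the closest ones."""

    def __init__(self, chunks, embeddings, embed_fn):
        self.chunks = chunks
        self.embeddings = embeddings
        self.embed_fn = embed_fn  # turns a query string into a vector

    def find_related(self, query, k):
        query_vector = self.embed_fn(query)
        scored = sorted(
            zip(self.embeddings, self.chunks),
            key=lambda pair: cosine_similarity(query_vector, pair[0]),
            reverse=True,
        )
        return [chunk for _, chunk in scored[:k]]


# Hypothetical stand-in for an embedding model: count a few known words.
VOCABULARY = ["commit", "branch", "merge", "stash"]


def count_words(text):
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCABULARY]


chunks = [
    "git commit records changes",
    "git branch lists branches",
    "git merge joins a branch into another branch",
]
store = ToyVectorStore(chunks, [count_words(c) for c in chunks], count_words)
print(store.find_related("how do I merge a branch", 2))
```

A real embedding model captures meaning rather than exact word matches, but the ranking logic stays the same: embed the query, compare it with the stored vectors, and return the best matches as context for the prompt.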

5. Create the Streamlit front-end

Now that our RAG framework is up and running, we can start creating a front-end for it. For this tutorial we’ll create a front-end using Streamlit. In order to send requests to UbiOps from the dashboard, we’ll need to provide a UbiOps API token with the deployment-request-user permissions (you can also use the API token you entered with project editor rights). After providing the token you can enter a prompt, which is then sent to your Mistral deployment on UbiOps. There the prompt will be enhanced with additional context we created earlier in this blog post, and Mistral will generate a response which is then sent back to the Streamlit dashboard.

import streamlit as st
import ubiops
import os

# App title
st.set_page_config(page_title="💬 Git Chatbot Assistant")

# UbiOps credentials
with st.sidebar:
    st.title('💬 Git Chatbot Assistant')

    # Initialize the variable outside the if-else block
    if 'UBIOPS_API_TOKEN' in st.secrets:
        st.success('API key already provided!', icon='✅')
        ubiops_api_token = st.secrets['UBIOPS_API_TOKEN']
    else:
        ubiops_api_token = st.text_input('Enter UbiOps API token:', type='password')
        if not ubiops_api_token.startswith('Token '):
            st.warning('Please enter your credentials!', icon='⚠️')
        else:
            st.success('Proceed to entering your prompt message!', icon='👉')

    st.markdown('📖 Learn how to build this app in this [blog](#link-to-blog)!')

# Move the environment variable assignment outside the with block
os.environ['UBIOPS_API_TOKEN'] = ubiops_api_token

# Store LLM generated responses
if "messages" not in st.session_state.keys():
    st.session_state.messages = [{"role": "assistant", "content": "How may I assist you today?"}]

# Display or clear chat messages
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.write(message["content"])

def clear_chat_history():
    st.session_state.messages = [{"role": "assistant", "content": "How may I assist you today?"}]

st.sidebar.button('Clear Chat History', on_click=clear_chat_history)

# Function for generating Mistral response
def generate_mistral_response(prompt_input):
    string_dialogue = "You are a helpful assistant. You do not respond as 'User' or pretend to be 'User'. You only respond once as 'Assistant'."
    for dict_message in st.session_state.messages:
        if dict_message["role"] == "user":
            string_dialogue += "User: " + dict_message["content"] + "\\n\\n"
        else:
            string_dialogue += "Assistant: " + dict_message["content"] + "\\n\\n"

    # Request mistral
    api = ubiops.CoreApi()
    response = api.deployment_version_requests_create(
        project_name = "demo-1",
        deployment_name = "mistral-rag-llm",
        version = "vl-4",
        data = {"user_input" : prompt_input}
    )
    api.api_client.close()
    return response.result['response']

# User-provided prompt
if prompt := st.chat_input(disabled=not ubiops_api_token):
    st.session_state.messages.append({"role": "user", "content": prompt})
    with st.chat_message("user"):
        st.write(prompt)

# Generate a new response if last message is not from assistant
if st.session_state.messages[-1]["role"] != "assistant":
    with st.chat_message("assistant"):
        with st.spinner("Thinking..."):
            response = generate_mistral_response(prompt)
            placeholder = st.empty()
            full_response = ''
            for item in response:
                full_response += item
                placeholder.markdown(full_response)
            placeholder.markdown(full_response)
    message = {"role": "assistant", "content": full_response}
    st.session_state.messages.append(message)
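In the app above, `generate_mistral_response` assembles the chat history into `string_dialogue`, but only the latest prompt is sent to the deployment. If you want to experiment with passing earlier turns along as well, the history formatting could be factored out into a pure function. The sketch below is one possible way to do that: `build_dialogue` is a hypothetical helper, and the separator and default system prompt are assumptions.

```python
def build_dialogue(messages, system_prompt="You are a helpful assistant."):
    """Turn a list of chat messages into a single dialogue string.

    Every message is a dict with a "role" ("user" or "assistant")
    and a "content" key, as stored in the Streamlit session state.
    """
    lines = [system_prompt]
    for message in messages:
        speaker = "User" if message["role"] == "user" else "Assistant"
        lines.append(f"{speaker}: {message['content']}")
    return "\n\n".join(lines)


history = [
    {"role": "assistant", "content": "How may I assist you today?"},
    {"role": "user", "content": "How do I undo my last commit?"},
]
print(build_dialogue(history))
```

Keep in mind that a longer prompt also takes up more of the context window that the deployment shares with the retrieved documentation.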

Conclusion

And there we have it!

Our very own Mistral-7B-Instruct deployment enhanced with RAG, hosted and served on UbiOps, with a customizable front-end! All in under 15 minutes, no software engineering required.

The deployment’s responses can be tuned further to improve their accuracy. That is out of scope for this article, but we invite you to iterate on and improve your own deployment!

Having completed this guide, you may be wondering how RAG compares to fine-tuning a pre-trained LLM: try our Falcon LLM fine-tuning guide to see for yourself. Or perhaps you’d like to build a front-end for your newly optimized chatbot: take a look at our guide to building a Llama 2 chatbot with a customizable Streamlit front-end.

If you’d like us to write about something specific, just shoot us a message or start a conversation in our Slack community. The UbiOps team would love to help you bring your project to life!

Thanks for reading!

The post Creating a front-end for your Mistral RAG appeared first on UbiOps - AI model serving, orchestration & training.